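Container Technology

Introduction to Docker Buildx

Distributing images that are built once and deployed in many places greatly improves application delivery efficiency. For applications that must be deployed across platforms, building multi-platform images with docker buildx is a quick and efficient solution.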

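Background

With the push toward domestically developed hardware and software (the "Xinchuang" initiative in China), the need to adapt applications to multiple operating systems and processor architectures is increasingly common. The usual approach is to build a separate version for each platform, but this is not easy when the development platform differs from the deployment target. For example, developing an application on x86 and deploying it to ARM machines usually requires ARM infrastructure for development and compilation.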

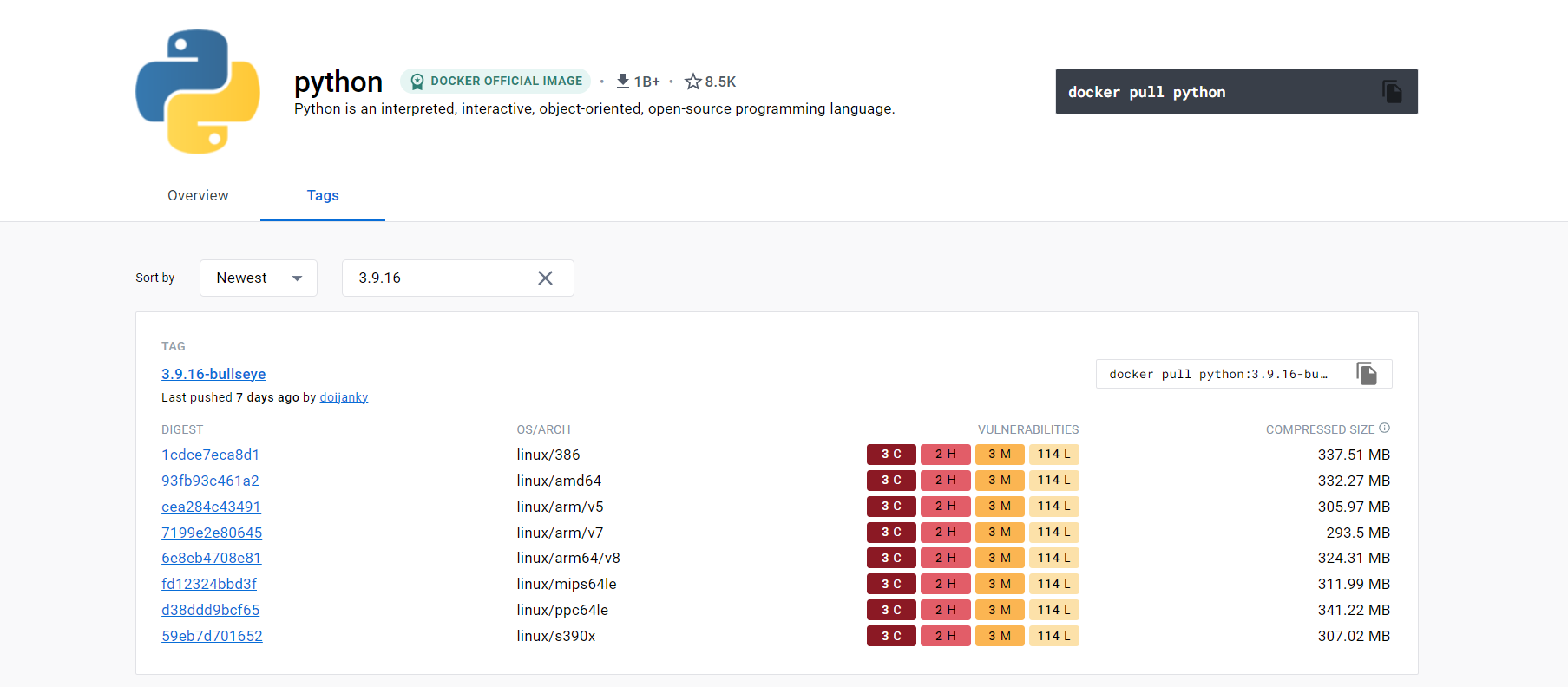

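Most image registries, Harbor for example, support multi-platform images: a single tag in a repository can hold several images for different platforms. Take the python repository on Docker Hub: the 3.9.16 tag contains 8 images for different operating systems and architectures (platform = OS + architecture). When you fetch a multi-platform image with docker pull or docker run, docker automatically selects the image that matches the platform it is running on. Because of this, distributing images across platforms requires no special handling on the consuming side. We only need to care about the producing side, that is, how to build multi-platform images.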
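To make "platform = OS + architecture" concrete, the sketch below maps the machine type reported by `uname -m` to the platform string Docker uses when it picks an image from a multi-platform tag. The function name `docker_platform_for` and the mapping table are illustrative assumptions covering common Linux cases only; they are not part of Docker.

```shell
# docker_platform_for MACHINE: print the Docker platform string
# (os/arch[/variant]) for a machine type as reported by `uname -m`.
# Hypothetical helper; the table is illustrative, not exhaustive.
docker_platform_for() {
    case "$1" in
        x86_64|amd64)  echo "linux/amd64" ;;
        aarch64|arm64) echo "linux/arm64" ;;
        armv7l)        echo "linux/arm/v7" ;;
        armv6l)        echo "linux/arm/v6" ;;
        i386|i686)     echo "linux/386" ;;
        ppc64le)       echo "linux/ppc64le" ;;
        s390x)         echo "linux/s390x" ;;
        riscv64)       echo "linux/riscv64" ;;
        *)             echo "unknown machine type: $1" >&2; return 1 ;;
    esac
}
```

Calling `docker_platform_for "$(uname -m)"` prints the platform of the current host. To fetch an image for a different platform than the host, `docker pull --platform linux/arm64 python:3.9.16` overrides the automatic selection.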

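Installing buildx

The default docker build command cannot perform cross-platform builds, so the docker CLI needs the buildx plugin to extend it. buildx exposes the additional build features provided by Moby BuildKit: it can create multiple builder instances, run builds in parallel on multiple nodes, and build for multiple platforms.

buildx requires Docker 19.03 or later. The binfmt_misc support used for emulated builds additionally requires a Linux kernel >= 4.8 (check with $ uname -a).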
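The two version requirements above can be checked with a small helper. This is a minimal sketch that assumes GNU or BusyBox `sort -V` is available; the function names `version_ge` and `kernel_supports_binfmt` are hypothetical.

```shell
# version_ge A B: succeed when dotted version A is >= B.
# Assumes a `sort` that supports -V (version sort).
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# kernel_supports_binfmt RELEASE: strip the distribution suffix from a
# `uname -r` string (everything after the first "-") and compare with 4.8.
kernel_supports_binfmt() {
    version_ge "${1%%-*}" "4.8"
}
```

For example, `kernel_supports_binfmt "$(uname -r)" && echo ok` checks the running kernel, and `version_ge "$(docker version --format '{{.Client.Version}}')" 19.03` checks the Docker CLI.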

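If your Docker installation does not ship with the buildx command, you can download the binary and install it manually:

- Find the binary for your platform on the Releases page of the Docker buildx project.
- Download the binary, rename it to docker-buildx, and move it to the docker plugin directory ~/.docker/cli-plugins.
- Make the binary executable.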
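The three steps above can be sketched as follows. The URL layout is an assumption based on how assets are named on the buildx GitHub Releases page, and both function names are hypothetical; check the Releases page for the exact asset name before relying on it.

```shell
# buildx_release_url VERSION OS ARCH: print the download URL of a release
# asset. The naming scheme is an assumption taken from the Releases page.
buildx_release_url() {
    echo "https://github.com/docker/buildx/releases/download/$1/buildx-$1.$2-$3"
}

# install_buildx VERSION OS ARCH: download the binary as docker-buildx into
# the CLI plugin directory and make it executable.
install_buildx() {
    plugin_dir="$HOME/.docker/cli-plugins"
    mkdir -p "$plugin_dir" &&
        curl -fsSL -o "$plugin_dir/docker-buildx" "$(buildx_release_url "$1" "$2" "$3")" &&
        chmod +x "$plugin_dir/docker-buildx"
}
```

A call such as `install_buildx v0.7.1 linux amd64` followed by `docker buildx version` would confirm that the plugin is picked up.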

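Enabling buildx

Docker 19.03 introduced a new feature that lets docker build images for other CPU architectures, such as arm. It is a unified build mechanism provided by Docker itself, but it has to be enabled before it can be used.

There are two ways to enable it; the second is recommended.

Method 1 sets an environment variable for the current shell:

$ export DOCKER_CLI_EXPERIMENTAL=enabled
$ docker buildx version
github.com/docker/buildx v0.7.1-docker 05846896d149da05f3d6fd1e7770da187b52a247

Method 2 writes the setting to the Docker CLI configuration file (note that the command below overwrites any existing ~/.docker/config.json):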

$ cat << EOF > ~/.docker/config.json

{

"experimental": "enabled"

}

EOF

$ docker buildx version

github.com/docker/buildx v0.7.1-docker 05846896d149da05f3d6fd1e7770da187b52a247

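Starting with v20.10, all experimental Docker CLI commands are enabled by default, so neither the configuration file entry nor the environment variable is needed.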

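The three cross-platform build strategies of buildx

There are three cross-platform build strategies to choose from:

- Strategy 1: emulate the target architecture with QEMU user-mode emulation and build the image under emulation.

  QEMU is usually used to emulate a complete operating system, but it can also run in user mode: a binary translation handler is registered with the host system through binfmt_misc, binaries are translated dynamically while they run, and system calls are converted from the target CPU architecture to the CPU architecture of the current system as needed. The end result is as if the target-architecture binary were running inside a virtual machine. This approach requires no changes to an existing Dockerfile and is cheap to set up, but it is clearly not efficient. Tip: Docker Desktop (macOS and Windows) has QEMU support built in.

- Strategy 2: add nodes of several different target platforms to one builder instance and build each platform's image on a native node.

  Adding native nodes of different architectures to a builder instance gives better support for cross-platform builds and is more efficient, but it requires enough infrastructure.

- Strategy 3: use a multi-stage build and cross-compile for the different target architectures.

  If the project's programming language supports cross-compilation (such as C and Go), you can use Dockerfile multi-stage builds: first compile the target-architecture binary in a stage that runs on the build node's own architecture, then copy that binary into another image for the target architecture. This approach needs no extra hardware and performs well, but only certain programming languages can use it.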

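Enabling binfmt_misc

If you use Docker Desktop (macOS and Windows), binfmt_misc is already enabled and you can skip this step. On Linux you need to enable binfmt_misc manually. An easy way is to run a privileged container that ships the setup script.

The steps below were performed on a recent kernel. binfmt_misc requires kernel 4.8 or later; the version used here is:

$ uname -r
5.10.134-13.al8.x86_64

$ docker run --rm --privileged tonistiigi/binfmt:latest --install all

List the architectures that are now supported: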

$ ls -l /proc/sys/fs/binfmt_misc/

total 0

drwxr-xr-x 2 root root 0 Aug 8 12:21 .

dr-xr-xr-x 1 root root 0 Aug 8 12:20 ..

-rw-r--r-- 1 root root 0 Aug 8 15:56 qemu-aarch64

-rw-r--r-- 1 root root 0 Aug 8 15:56 qemu-arm

-rw-r--r-- 1 root root 0 Aug 8 15:56 qemu-mips64

-rw-r--r-- 1 root root 0 Aug 8 15:56 qemu-mips64el

-rw-r--r-- 1 root root 0 Aug 8 15:56 qemu-ppc64le

-rw-r--r-- 1 root root 0 Aug 8 15:56 qemu-riscv64

-rw-r--r-- 1 root root 0 Aug 8 15:56 qemu-s390x

--w------- 1 root root 0 Aug 8 12:21 register

-rw-r--r-- 1 root root 0 Aug 8 12:21 status

Verify that the handler for the required architecture is enabled:

$ cat /proc/sys/fs/binfmt_misc/qemu-aarch64

enabled

interpreter /usr/bin/qemu-aarch64

flags: POCF

offset 0

magic 7f454c460201010000000000000000000200b700

mask ffffffffffffff00fffffffffffffffffeffffff
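The magic and mask lines describe how the kernel recognizes an aarch64 ELF binary: each leading byte of the file is compared with magic after both are ANDed with mask. In the entry above the mask ignores byte 7 (the OS ABI) and the lowest bit of byte 16 (so both executable and shared-object ELF types match), while bytes 18 and 19 (b7 00, little-endian 0xb7 = 183, the ELF machine number for AArch64) must match exactly. The following sketch reproduces that comparison on hex strings; `binfmt_matches` is a hypothetical helper, not a kernel or QEMU interface.

```shell
# binfmt_matches HEADER MAGIC MASK: succeed when the hex-encoded file header
# matches MAGIC under MASK, byte by byte: (header & mask) == (magic & mask).
# All three arguments are hex strings of the same length.
binfmt_matches() {
    header=$1
    magic=$2
    mask=$3
    i=1
    len=${#magic}
    while [ "$i" -lt "$len" ]; do
        j=$((i + 1))
        h=$(printf '%s' "$header" | cut -c"$i"-"$j")
        g=$(printf '%s' "$magic" | cut -c"$i"-"$j")
        m=$(printf '%s' "$mask" | cut -c"$i"-"$j")
        [ $((0x$h & 0x$m)) -eq $((0x$g & 0x$m)) ] || return 1
        i=$((i + 2))
    done
    return 0
}
```

On a real file, the first 20 bytes can be obtained as hex with `od -An -tx1 -N20 FILE | tr -d ' \n'` and passed as HEADER.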

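buildx instances

docker buildx manages build configuration and nodes through builder instances. The CLI sends a build to a builder instance, which then assigns it to a node that meets the requirements. Several builder instances can be created on top of the same Docker daemon and handed to different projects to isolate their configuration. A builder instance can also group a set of remote Docker nodes into a build farm, and you can switch quickly between farms.

- Create a buildx instance; it supports all platforms by default

$ docker buildx create --name multi-builder
multi-builder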

- List the buildx instances. default is the instance created automatically; the default node multi-builder0 of the newly created multi-builder instance is in the inactive state

$ docker buildx ls
NAME/NODE        DRIVER/ENDPOINT              STATUS    PLATFORMS
multi-builder    docker-container
  multi-builder0 unix:///var/run/docker.sock  inactive
default *        docker
  default        default                      running   linux/amd64, linux/amd64/v2, linux/amd64/v3, linux/amd64/v4, linux/arm64, linux/riscv64, linux/ppc64le, linux/s390x, linux/386, linux/mips64le, linux/mips64, linux/arm/v7, linux/arm/v6

- Switch to the multi-builder instance. The * has moved, which shows that multi-builder is now the default instance for builds

$ docker buildx use multi-builder
$ docker buildx ls
NAME/NODE        DRIVER/ENDPOINT              STATUS    BUILDKIT              PLATFORMS
multi-builder *  docker-container
  multi-builder0 unix:///var/run/docker.sock  inactive
default          docker
  default        default                      running   v0.11.6+0a15675913b7  linux/amd64, linux/amd64/v2, linux/amd64/v3, linux/amd64/v4, linux/386, linux/arm64, linux/riscv64, linux/ppc64le, linux/s390x, linux/mips64le, linux/mips64, linux/arm/v7, linux/arm/v6

- Start the multi-builder instance

$ docker buildx inspect multi-builder --bootstrap
[+] Building 152.8s (1/1) FINISHED
=> [internal] booting buildkit 152.8s
=> => pulling image moby/buildkit:buildx-stable-1 151.6s
=> => creating container buildx_buildkit_mybuilder0 1.2s
Name:   multi-builder
Driver: docker-container

Nodes:
Name:      multi-builder0
Endpoint:  unix:///var/run/docker.sock
Status:    running
Platforms: linux/amd64, linux/amd64/v2, linux/amd64/v3, linux/amd64/v4, linux/arm64, linux/riscv64, linux/ppc64le, linux/s390x, linux/386, linux/mips64le, linux/mips64, linux/arm/v7, linux/arm/v6

- Verify that the instance has started: multi-builder0 is now in the running state

$ docker buildx ls
NAME/NODE        DRIVER/ENDPOINT              STATUS    BUILDKIT              PLATFORMS
multi-builder *  docker-container
  multi-builder0 unix:///var/run/docker.sock  running   v0.12.1               linux/amd64, linux/amd64/v2, linux/amd64/v3, linux/amd64/v4, linux/arm64, linux/riscv64, linux/ppc64le, linux/s390x, linux/386, linux/mips64le, linux/mips64, linux/arm/v7, linux/arm/v6
default          docker
  default        default                      running   v0.11.6+0a15675913b7  linux/amd64, linux/amd64/v2, linux/amd64/v3, linux/amd64/v4, linux/386, linux/arm64, linux/riscv64, linux/ppc64le, linux/s390x, linux/mips64le, linux/mips64, linux/arm/v7, linux/arm/v6

Tip: add the --bootstrap option to the create or inspect subcommand to start the instance immediately. Once it has started, the container buildx_buildkit_multi-builder0 is running:

$ docker ps | grep moby
4e822c83d3cf moby/buildkit:buildx-stable-1 "buildkitd" 2 weeks ago Up 2 weeks buildx_buildkit_multi-builder0
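When scripting around builder instances it helps to know which one is currently selected. The sketch below reads `docker buildx ls` output on stdin and prints the name of the builder marked with `*`. It assumes the column layout shown in this article (name, then a separate `*` field); other buildx versions may format the marker differently, and `current_builder` is a hypothetical helper name.

```shell
# current_builder: read `docker buildx ls` output on stdin and print the
# name of the builder whose second field is the "*" marker.
# Assumes the layout shown above; the header line (NR == 1) is skipped.
current_builder() {
    awk 'NR > 1 && $2 == "*" { print $1; exit }'
}
```

Typical use is `docker buildx ls | current_builder`, for example to refuse to start a multi-platform build while the default builder is still selected.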

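Here two nodes are used: one on the amd64 platform and one on the arm64 platform.

Note: both nodes must expose the Docker API over TCP so that the builder can reach them. Add the parameter -H tcp://0.0.0.0:2375 to the dockerd command line as shown below. This port is neither encrypted nor authenticated, so expose it only on a trusted network.

$ cat /usr/lib/systemd/system/docker.service
[Service]
...
ExecStart=/usr/bin/dockerd -H fd:// -H tcp://0.0.0.0:2375 --containerd=/run/containerd/containerd.sock
$ systemctl daemon-reload
$ systemctl restart docker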

- Create the instance and add an x86 (amd64) node

$ export DOCKER_HOST=tcp://10.70.13.13:2375
$ docker buildx create --name remote-builder --driver docker-container --platform linux/amd64 --node node1
remote-builder

- Append a second node with the arm64 architecture

$ export DOCKER_HOST=tcp://10.70.206.212:2375
$ docker buildx create --name remote-builder --driver docker-container --platform linux/arm64 --append --node node2
remote-builder

- Switch to the remote-builder instance, which now contains the two nodes

$ docker buildx use remote-builder
$ docker buildx ls
NAME/NODE        DRIVER/ENDPOINT              STATUS    BUILDKIT              PLATFORMS
multi-builder    docker-container
  multi-builder0 unix:///var/run/docker.sock  stopped
remote-builder * docker-container
  node1          tcp://10.70.13.13:2375       running   v0.12.1               linux/amd64*, linux/amd64/v2, linux/amd64/v3, linux/amd64/v4, linux/arm64, linux/riscv64, linux/ppc64le, linux/s390x, linux/386, linux/mips64le, linux/mips64, linux/arm/v7, linux/arm/v6
  node2          tcp://10.70.206.212:2375     running   v0.12.1               linux/arm64*, linux/arm/v7, linux/arm/v6
default          docker
  default        default                      running   v0.11.6+0a15675913b7  linux/arm64, linux/arm/v7, linux/arm/v6

- Start the instance. This deploys a buildkit container to the docker daemon on each of the two nodes so that they can accept build jobs

$ docker buildx inspect --bootstrap remote-builder
Name:          remote-builder
Driver:        docker-container
Last Activity: 2023-09-01 07:47:49 +0000 UTC

Nodes:
Name:      node1
Endpoint:  tcp://10.70.13.13:2375
Status:    running
Buildkit:  v0.12.1
Platforms: linux/amd64*, linux/amd64/v2, linux/amd64/v3, linux/amd64/v4, linux/arm64, linux/riscv64, linux/ppc64le, linux/s390x, linux/386, linux/mips64le, linux/mips64, linux/arm/v7, linux/arm/v6
Labels:
 org.mobyproject.buildkit.worker.executor: oci
 org.mobyproject.buildkit.worker.hostname: 2ab6d670ae41
 org.mobyproject.buildkit.worker.network: host
 org.mobyproject.buildkit.worker.oci.process-mode: sandbox
 org.mobyproject.buildkit.worker.selinux.enabled: false
 org.mobyproject.buildkit.worker.snapshotter: overlayfs
GC Policy rule#0:
 All: false
 Filters: type==source.local,type==exec.cachemount,type==source.git.checkout
 Keep Duration: 48h0m0s
 Keep Bytes: 488.3MiB
GC Policy rule#1:
 All: false
 Keep Duration: 1440h0m0s
 Keep Bytes: 9.313GiB
GC Policy rule#2:
 All: false
 Keep Bytes: 9.313GiB
GC Policy rule#3:
 All: true
 Keep Bytes: 9.313GiB

Name:      node2
Endpoint:  tcp://10.70.206.212:2375
Status:    running
Buildkit:  v0.12.1
Platforms: linux/arm64*, linux/arm/v7, linux/arm/v6
Labels:
 org.mobyproject.buildkit.worker.executor: oci
 org.mobyproject.buildkit.worker.hostname: aedb6dc65ad6
 org.mobyproject.buildkit.worker.network: host
 org.mobyproject.buildkit.worker.oci.process-mode: sandbox
 org.mobyproject.buildkit.worker.selinux.enabled: false
 org.mobyproject.buildkit.worker.snapshotter: overlayfs
GC Policy rule#0:
 All: false
 Filters: type==source.local,type==exec.cachemount,type==source.git.checkout
 Keep Duration: 48h0m0s
 Keep Bytes: 488.3MiB
GC Policy rule#1:
 All: false
 Keep Duration: 1440h0m0s
 Keep Bytes: 9.313GiB
GC Policy rule#2:
 All: false
 Keep Bytes: 9.313GiB
GC Policy rule#3:
 All: true
 Keep Bytes: 9.313GiB

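Tip: explicitly specifying the platform of each remote node lets builds be dispatched precisely. A node can support several architectures or variants; the * marks the platform that was assigned to it with --platform, and a build for that platform is routed to the marked node by default.

Command to remove a node:

$ docker buildx create --name remote-builder --leave --node node2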
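With more than two nodes, typing the create commands by hand becomes repetitive. The sketch below prints (it does not run) one `docker buildx create` command per node from a list of platform=endpoint pairs, adding --append for every node after the first. It passes the endpoint as the positional argument of `docker buildx create` instead of exporting DOCKER_HOST; the helper name `print_builder_cmds` and the node1, node2 naming are assumptions for illustration.

```shell
# print_builder_cmds NAME PLATFORM=ENDPOINT...: print the commands that would
# build up a multi-node builder. Nothing is executed; pipe the output to `sh`
# after reviewing it.
print_builder_cmds() {
    name=$1
    shift
    n=1
    for pair in "$@"; do
        platform=${pair%%=*}   # text before the first "="
        endpoint=${pair#*=}    # text after the first "="
        append=""
        if [ "$n" -gt 1 ]; then
            append=" --append"
        fi
        echo "docker buildx create --name $name --driver docker-container --platform $platform$append --node node$n $endpoint"
        n=$((n + 1))
    done
}
```

For the two nodes used in this article the call would be `print_builder_cmds remote-builder linux/amd64=tcp://10.70.13.13:2375 linux/arm64=tcp://10.70.206.212:2375`.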

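Build drivers

A buildx instance can execute builds in two ways, referred to as drivers:

- docker driver: uses the BuildKit library integrated into the Docker daemon to run the build.
- docker-container driver: starts a container that contains BuildKit and runs the build inside it.

The docker driver cannot use a small subset of buildx features (such as building images for multiple platforms in a single run). The default output also differs. The docker driver writes the build result as a Docker image directly into docker's local image store (under /var/lib/docker by default), so docker images lists it afterwards. The docker-container driver needs the --output option (or its shorthands --load and --push) to choose an image or another output format. To build images for several platforms in one run, the usual choice is a builder instance that uses the docker-container driver and pushes the result directly to a registry.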

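Build example

buildx provides the following built-in variables:

| Variable | Description |
|---|---|
| TARGETPLATFORM | Target platform of the image being built, e.g. linux/amd64, linux/arm/v7, windows/amd64 |
| TARGETOS | OS component of TARGETPLATFORM, e.g. linux, windows |
| TARGETARCH | Architecture component of TARGETPLATFORM, e.g. amd64, arm |
| TARGETVARIANT | Variant component of TARGETPLATFORM, e.g. v7; may be empty |
| BUILDPLATFORM | Platform of the host that performs the build, e.g. linux/amd64 |
| BUILDOS | OS component of BUILDPLATFORM, e.g. linux |
| BUILDARCH | Architecture component of BUILDPLATFORM, e.g. amd64 |
| BUILDVARIANT | Variant component of BUILDPLATFORM, e.g. v7; may be empty |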
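The relationship between TARGETPLATFORM and its three components can be illustrated by splitting a platform string by hand. This is only a sketch of the decomposition described in the table; buildx sets the real variables itself, and `split_platform` is a hypothetical helper.

```shell
# split_platform PLATFORM: split "os/arch[/variant]" into the three
# components and print them in the form of the TARGET* variables.
split_platform() {
    os=${1%%/*}        # text before the first "/"
    rest=${1#*/}       # text after the first "/"
    arch=${rest%%/*}
    variant=""
    case "$rest" in
        */*) variant=${rest#*/} ;;
    esac
    echo "TARGETOS=$os TARGETARCH=$arch TARGETVARIANT=$variant"
}
```

For `linux/arm/v7` this prints `TARGETOS=linux TARGETARCH=arm TARGETVARIANT=v7`; for `linux/amd64` the variant is empty.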

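For demonstration purposes, the following Go program is used. It prints the architecture of the machine it runs on.

package main

import (
	"fmt"
	"runtime"
)

func main() {
	fmt.Println("Hello world!")
	// runtime.GOARCH is the architecture the binary was compiled for.
	fmt.Printf("Running in [%s] architecture.\n", runtime.GOARCH)
}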

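The Dockerfile uses a two-stage build. The first stage runs on the build platform and cross-compiles for the target; the second stage copies the binary into an image for the target platform.

# Build stage: runs natively on the build host and cross-compiles.
FROM --platform=$BUILDPLATFORM golang:1.18 AS builder
# Set automatically by buildx for each requested platform.
ARG TARGETARCH
ARG TARGETVARIANT
WORKDIR /app
COPY main.go /app/main.go
# GOARM expects 5, 6 or 7, so strip the leading "v" from TARGETVARIANT.
RUN GOOS=linux GOARCH=$TARGETARCH GOARM=${TARGETVARIANT#v} go build -a -o output/main main.go

# Runtime stage: the base image is pulled for the target platform.
FROM alpine:latest
WORKDIR /root
COPY --from=builder /app/output/main .
CMD ["/root/main"]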
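One detail of the cross-compile step deserves a closer look: Go's GOARM setting accepts the bare numbers 5, 6 or 7, whereas TARGETVARIANT carries a leading v (for example v7) and is empty on most non-arm platforms. The sketch below shows the mapping in isolation; `go_env_for` is a hypothetical helper and not part of Go or buildx.

```shell
# go_env_for TARGETARCH [TARGETVARIANT]: print the environment assignments
# for a Linux cross-build. GOARM is emitted only for 32-bit arm with a
# variant, with the leading "v" removed.
go_env_for() {
    if [ "$1" = "arm" ] && [ -n "${2:-}" ]; then
        echo "GOOS=linux GOARCH=$1 GOARM=${2#v}"
    else
        echo "GOOS=linux GOARCH=$1"
    fi
}
```

The output can be used as a prefix for the compiler call, for example `env $(go_env_for arm v7) go build -o output/main main.go`.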

Running the build

Cross-compile with the multi-builder instance and push the images directly to the registry (--push is shorthand for -o type=registry, so one of the two is sufficient):

$ docker buildx use multi-builder
$ docker buildx build --provenance=false --platform linux/amd64,linux/386,linux/arm64,linux/arm --push -t 192.168.32.135:9001/multi/arch-demo:v1.0 -o type=registry .
[+] Building 871.1s (30/30) FINISHED
=> [internal] load build definition from Dockerfile 0.0s
=> => transferring dockerfile: 367B 0.0s
=> [internal] load .dockerignore 0.0s
=> => transferring context: 2B 0.0s
=> [linux/amd64 internal] load metadata for docker.io/library/golang:1.18 13.8s
=> [linux/386 internal] load metadata for docker.io/library/alpine:latest 13.5s
=> [linux/arm/v7 internal] load metadata for docker.io/library/alpine:latest 13.4s
=> [linux/amd64 internal] load metadata for docker.io/library/alpine:latest 13.4s
=> [linux/arm64 internal] load metadata for docker.io/library/alpine:latest 13.1s
=> [internal] load build context 0.0s
=> => transferring context: 260B 0.0s
=> [linux/386 stage-1 1/3] FROM docker.io/library/alpine:latest@sha256:69665d02cb32192e52e07644d76bc6f25abeb5410edc1c7a81a10ba3f0efb90a 23.2s
=> => resolve docker.io/library/alpine:latest@sha256:69665d02cb32192e52e07644d76bc6f25abeb5410edc1c7a81a10ba3f0efb90a 0.0s
=> => sha256:b3e346c15c2377ec0e75cc9efa98baecd58b0e9bb92f0ec07eeda0bcc7e67fc7 3.41MB / 3.41MB 23.0s
=> => extracting sha256:b3e346c15c2377ec0e75cc9efa98baecd58b0e9bb92f0ec07eeda0bcc7e67fc7 0.2s
=> [linux/amd64 builder 1/4] FROM docker.io/library/golang:1.18@sha256:50c889275d26f816b5314fc99f55425fa76b18fcaf16af255f5d57f09e1f48da 828.6s
=> => resolve docker.io/library/golang:1.18@sha256:50c889275d26f816b5314fc99f55425fa76b18fcaf16af255f5d57f09e1f48da 0.0s
=> => sha256:cc7973a07a5b4a44399c5d36fa142f37bb343bb123a3736357365fd9040ca38a 156B / 156B 1.6s
=> => sha256:06d0c5d18ef41fa1c2382bd2afd189a01ebfff4910b868879b6dcfeef46bc003 141.98MB / 141.98MB 757.0s
=> => sha256:9bd150679dbdb02d9d4df4457d54211d6ee719ca7bc77747a7be4cd99ae03988 54.58MB / 54.58MB 358.9s
=> => sha256:bfcb68b5bd105d3f88a2c15354cff6c253bedc41d83c1da28b3d686c37cd9103 85.98MB / 85.98MB 788.7s
=> => sha256:56261d0e6b05ece42650b14830960db5b42a9f23479d868256f91d96869ac0c2 10.88MB / 10.88MB 151.3s
=> => sha256:f049f75f014ee8fec2d4728b203c9cbee0502ce142aec030f874aa28359e25f1 5.16MB / 5.16MB 72.1s
=> => sha256:bbeef03cda1f5d6c9e20c310c1c91382a6b0a1a2501c3436b28152f13896f082 55.03MB / 55.03MB 490.1s
=> => extracting sha256:bbeef03cda1f5d6c9e20c310c1c91382a6b0a1a2501c3436b28152f13896f082 2.4s
=> => extracting sha256:f049f75f014ee8fec2d4728b203c9cbee0502ce142aec030f874aa28359e25f1 0.3s
=> => extracting sha256:56261d0e6b05ece42650b14830960db5b42a9f23479d868256f91d96869ac0c2 0.3s
=> => extracting sha256:9bd150679dbdb02d9d4df4457d54211d6ee719ca7bc77747a7be4cd99ae03988 2.6s
=> => extracting sha256:bfcb68b5bd105d3f88a2c15354cff6c253bedc41d83c1da28b3d686c37cd9103 2.8s
=> => extracting sha256:06d0c5d18ef41fa1c2382bd2afd189a01ebfff4910b868879b6dcfeef46bc003 7.6s
=> => extracting sha256:cc7973a07a5b4a44399c5d36fa142f37bb343bb123a3736357365fd9040ca38a 0.0s
=> [linux/arm64 stage-1 1/3] FROM docker.io/library/alpine:latest@sha256:69665d02cb32192e52e07644d76bc6f25abeb5410edc1c7a81a10ba3f0efb90a 47.0s
=> => resolve docker.io/library/alpine:latest@sha256:69665d02cb32192e52e07644d76bc6f25abeb5410edc1c7a81a10ba3f0efb90a 0.0s
=> => sha256:af6eaf76a39c2d3e7e0b8a0420486e3df33c4027d696c076a99a3d0ac09026af 3.26MB / 3.26MB 46.8s
=> => extracting sha256:af6eaf76a39c2d3e7e0b8a0420486e3df33c4027d696c076a99a3d0ac09026af 0.2s
=> [linux/arm/v7 stage-1 1/3] FROM docker.io/library/alpine:latest@sha256:69665d02cb32192e52e07644d76bc6f25abeb5410edc1c7a81a10ba3f0efb90a 29.6s
=> => resolve docker.io/library/alpine:latest@sha256:69665d02cb32192e52e07644d76bc6f25abeb5410edc1c7a81a10ba3f0efb90a 0.0s
=> => sha256:6fb81ff47bd6d7db0ed86c9b951ad6417ec73ab60af6d22daa604076a902629c 2.87MB / 2.87MB 29.5s
=> => extracting sha256:6fb81ff47bd6d7db0ed86c9b951ad6417ec73ab60af6d22daa604076a902629c 0.1s
=> [linux/amd64 stage-1 1/3] FROM docker.io/library/alpine:latest@sha256:69665d02cb32192e52e07644d76bc6f25abeb5410edc1c7a81a10ba3f0efb90a 24.0s
=> => resolve docker.io/library/alpine:latest@sha256:69665d02cb32192e52e07644d76bc6f25abeb5410edc1c7a81a10ba3f0efb90a 0.0s
=> => sha256:63b65145d645c1250c391b2d16ebe53b3747c295ca8ba2fcb6b0cf064a4dc21c 3.37MB / 3.37MB 23.8s
=> => extracting sha256:63b65145d645c1250c391b2d16ebe53b3747c295ca8ba2fcb6b0cf064a4dc21c 0.2s
=> [linux/386 stage-1 2/3] WORKDIR /root 0.0s
=> [linux/amd64 stage-1 2/3] WORKDIR /root 0.0s
=> [linux/arm/v7 stage-1 2/3] WORKDIR /root 0.0s
=> [linux/arm64 stage-1 2/3] WORKDIR /root 0.0s
=> [linux/amd64 builder 2/4] WORKDIR /app 10.4s
=> [linux/amd64 builder 3/4] COPY main.go /app/main.go 0.0s
=> [linux/amd64 builder 4/4] RUN GOOS=linux GOARCH=386 go build -a -o output/main main.go 14.3s
=> [linux/amd64 builder 4/4] RUN GOOS=linux GOARCH=amd64 go build -a -o output/main main.go 14.5s
=> [linux/amd64 builder 4/4] RUN GOOS=linux GOARCH=arm64 go build -a -o output/main main.go 14.3s
=> [linux/amd64 builder 4/4] RUN GOOS=linux GOARCH=arm go build -a -o output/main main.go 14.5s
=> [linux/386 stage-1 3/3] COPY --from=builder /app/output/main . 0.0s
=> [linux/arm64 stage-1 3/3] COPY --from=builder /app/output/main . 0.0s
=> [linux/arm/v7 stage-1 3/3] COPY --from=builder /app/output/main . 0.0s
=> [linux/amd64 stage-1 3/3] COPY --from=builder /app/output/main . 0.0s
=> exporting to image 3.5s
=> => exporting layers 0.3s
=> => exporting manifest sha256:0b35bdcec00d77858b30cb13f746edffe4fb97e87489bda6e2065aeaef56ae59 0.0s
=> => exporting config sha256:c6c9d06298f0694cf879436dc2694babea557052ebb20ad18411e5c81401a074 0.0s
=> => exporting manifest sha256:584e428681f0c4a486cbc39d8a89a42e651f7227cf4f8328d822a2973f9832b0 0.0s
=> => exporting config sha256:6e1969a55f770198a4e9a94c041398091e83e1754df2b6876934c511b581f9ba 0.0s
=> => exporting manifest sha256:f3c20899d6be217045b5663dcf153c196d85a05d65633d11eb9915e8c05cdff9 0.0s
=> => exporting config sha256:57deed33a224b0d32e1c8124f237861ef9831dc92508e05a28f97b0482d8dfd1 0.0s
=> => exporting manifest sha256:fa4d813e77ce265a8fdcd662ffb254b54e0f93389859c486fcf2d50a3cbb009d 0.0s
=> => exporting config sha256:301cecf68f15bac0a476327001732c16ed78ad4a95487b41251206e5e6a91d45 0.0s
=> => exporting manifest list sha256:4c801f3a5742becc5389d10ca0a9e0afbea33552924aa15e8ebb09f1ea2acfa2 0.0s
=> => pushing layers 2.7s
=> => pushing manifest for 192.168.32.135:9001/multi/arch-demo:v1.0@sha256:4c801f3a5742becc5389d10ca0a9e0afbea33552924aa15e8ebb09f1ea2acfa2 0.5s
=> [auth] multi/arch-demo:pull,push token for 192.168.32.135:9001 0.0s
=> [auth] multi/arch-demo:pull,push token for 192.168.32.135:9001

Select the remote-builder instance for this build; the result is again pushed directly to the remote registry:

$ docker buildx use remote-builder
$ docker buildx build --provenance=false --platform linux/amd64,linux/arm64 --push -t 192.168.32.135:9001/multi/arch-demo:v2.0-remote -o type=registry .
[+] Building 777.6s (28/28) FINISHED
=> [internal] load build definition from Dockerfile 0.0s
=> => transferring dockerfile: 366B 0.0s
=> [internal] load .dockerignore 0.0s
=> => transferring context: 2B 0.0s
=> [linux/amd64 internal] load metadata for docker.io/library/alpine:latest 3.3s
=> [linux/amd64 internal] load metadata for docker.io/library/golang:1.18 3.3s
=> [internal] load build definition from Dockerfile 0.2s
=> => transferring dockerfile: 366B 0.1s
=> [internal] load .dockerignore 0.1s
=> => transferring context: 2B 0.1s
=> [linux/arm64 internal] load metadata for docker.io/library/alpine:latest 4.3s
=> [linux/arm64 internal] load metadata for docker.io/library/golang:1.18 3.6s
=> [linux/amd64 builder 1/4] FROM docker.io/library/golang:1.18@sha256:50c889275d26f816b5314fc99f55425fa76b18fcaf16af255f5d57f09e1f48da 764.1s
=> => resolve docker.io/library/golang:1.18@sha256:50c889275d26f816b5314fc99f55425fa76b18fcaf16af255f5d57f09e1f48da 0.0s
=> => sha256:06d0c5d18ef41fa1c2382bd2afd189a01ebfff4910b868879b6dcfeef46bc003 141.98MB / 141.98MB 752.9s
=> => sha256:bfcb68b5bd105d3f88a2c15354cff6c253bedc41d83c1da28b3d686c37cd9103 85.98MB / 85.98MB 721.7s
=> => sha256:9bd150679dbdb02d9d4df4457d54211d6ee719ca7bc77747a7be4cd99ae03988 54.58MB / 54.58MB 616.9s
=> => sha256:f049f75f014ee8fec2d4728b203c9cbee0502ce142aec030f874aa28359e25f1 5.16MB / 5.16MB 38.7s
=> => sha256:bbeef03cda1f5d6c9e20c310c1c91382a6b0a1a2501c3436b28152f13896f082 55.03MB / 55.03MB 369.7s
=> => extracting sha256:bbeef03cda1f5d6c9e20c310c1c91382a6b0a1a2501c3436b28152f13896f082 2.5s
=> => extracting sha256:f049f75f014ee8fec2d4728b203c9cbee0502ce142aec030f874aa28359e25f1 0.4s
=> => extracting sha256:56261d0e6b05ece42650b14830960db5b42a9f23479d868256f91d96869ac0c2 0.3s
=> => extracting sha256:9bd150679dbdb02d9d4df4457d54211d6ee719ca7bc77747a7be4cd99ae03988 3.0s
=> => extracting sha256:bfcb68b5bd105d3f88a2c15354cff6c253bedc41d83c1da28b3d686c37cd9103 3.0s
=> => extracting sha256:06d0c5d18ef41fa1c2382bd2afd189a01ebfff4910b868879b6dcfeef46bc003 11.1s
=> => extracting sha256:cc7973a07a5b4a44399c5d36fa142f37bb343bb123a3736357365fd9040ca38a 0.0s
=> [internal] load build context 0.0s
=> => transferring context: 89B 0.0s
=> [linux/amd64 stage-1 1/3] FROM docker.io/library/alpine:latest@sha256:69665d02cb32192e52e07644d76bc6f25abeb5410edc1c7a81a10ba3f0efb90a 0.0s
=> => resolve docker.io/library/alpine:latest@sha256:69665d02cb32192e52e07644d76bc6f25abeb5410edc1c7a81a10ba3f0efb90a 0.0s
=> CACHED [linux/amd64 stage-1 2/3] WORKDIR /root 0.0s
=> [linux/arm64 builder 1/4] FROM docker.io/library/golang:1.18@sha256:50c889275d26f816b5314fc99f55425fa76b18fcaf16af255f5d57f09e1f48da 0.1s
=> => resolve docker.io/library/golang:1.18@sha256:50c889275d26f816b5314fc99f55425fa76b18fcaf16af255f5d57f09e1f48da 0.1s
=> [internal] load build context 0.1s
=> => transferring context: 89B 0.0s
=> [linux/arm64 stage-1 1/3] FROM docker.io/library/alpine:latest@sha256:69665d02cb32192e52e07644d76bc6f25abeb5410edc1c7a81a10ba3f0efb90a 0.2s
=> => resolve docker.io/library/alpine:latest@sha256:69665d02cb32192e52e07644d76bc6f25abeb5410edc1c7a81a10ba3f0efb90a 0.2s
=> CACHED [linux/arm64 stage-1 2/3] WORKDIR /root 0.0s
=> CACHED [linux/arm64 builder 2/4] WORKDIR /app 0.0s
=> CACHED [linux/arm64 builder 3/4] COPY main.go /app/main.go 0.0s
=> CACHED [linux/arm64 builder 4/4] RUN GOOS=linux GOARCH=arm64 go build -a -o output/main main.go 0.0s
=> CACHED [linux/arm64 stage-1 3/3] COPY --from=builder /app/output/main . 0.0s
=> exporting to image 0.9s
=> => exporting layers 0.0s
=> => exporting manifest sha256:5d5f52225b35a18f0fc2d2dd9134cf82e7d7e55e9680de160105e828b5da445e 0.0s
=> => exporting config sha256:c756024ca88012423242bdba92ac526501af4ee6ca7f876e7378c47c019a15b4 0.0s
=> => exporting attestation manifest sha256:5722da0ca7661a1bfc6fe98ec2168280282f373c3209412417bdd54f642bbd72 0.1s
=> => exporting manifest list sha256:26c0503dab2e58fdb8f8462f7e3f711b4eb2694a672b86f395654c46580e72f0 0.1s
=> => pushing layers 0.3s
=> => pushing manifest for hub-test.quanshi.com/multi/arch-demo 0.4s
=> [linux/amd64 builder 2/4] WORKDIR /app 0.8s
=> [linux/amd64 builder 3/4] COPY main.go /app/main.go 0.2s
=> [linux/amd64 builder 4/4] RUN GOOS=linux GOARCH=amd64 go build -a -o output/main main.go 5.5s
=> [linux/amd64 stage-1 3/3] COPY --from=builder /app/output/main . 0.0s
=> exporting to image 2.0s
=> => exporting layers 0.1s
=> => exporting manifest sha256:2e10d4fa2d37639d0ed96ccfbb079c68ffc3c7a9619820ab0f60b35e128434eb 0.0s
=> => exporting config sha256:30b481817c5066bf078816347ed3bc5d521fc68bd7182f4b62c7c70b68617088 0.0s
=> => exporting attestation manifest sha256:3fee00439ea50d3769df0f6fd00087908c9a32e6c8d9d23a8112ca00b4bb6fb4 0.0s
=> => exporting manifest list sha256:7b6559e86f9f67ebb710accf5d4bcff0f28bb03c2581189dc668c95ed42ccb4d 0.0s
=> => pushing layers 1.5s
=> => pushing manifest for 192.168.32.135:9001/multi/arch-demo 0.3s
=> [auth] multi/arch-demo:pull,push token for 192.168.32.135:9001.com 0.0s
=> merging manifest list 192.168.32.135:9001/multi/arch-demo:v2.0-remote

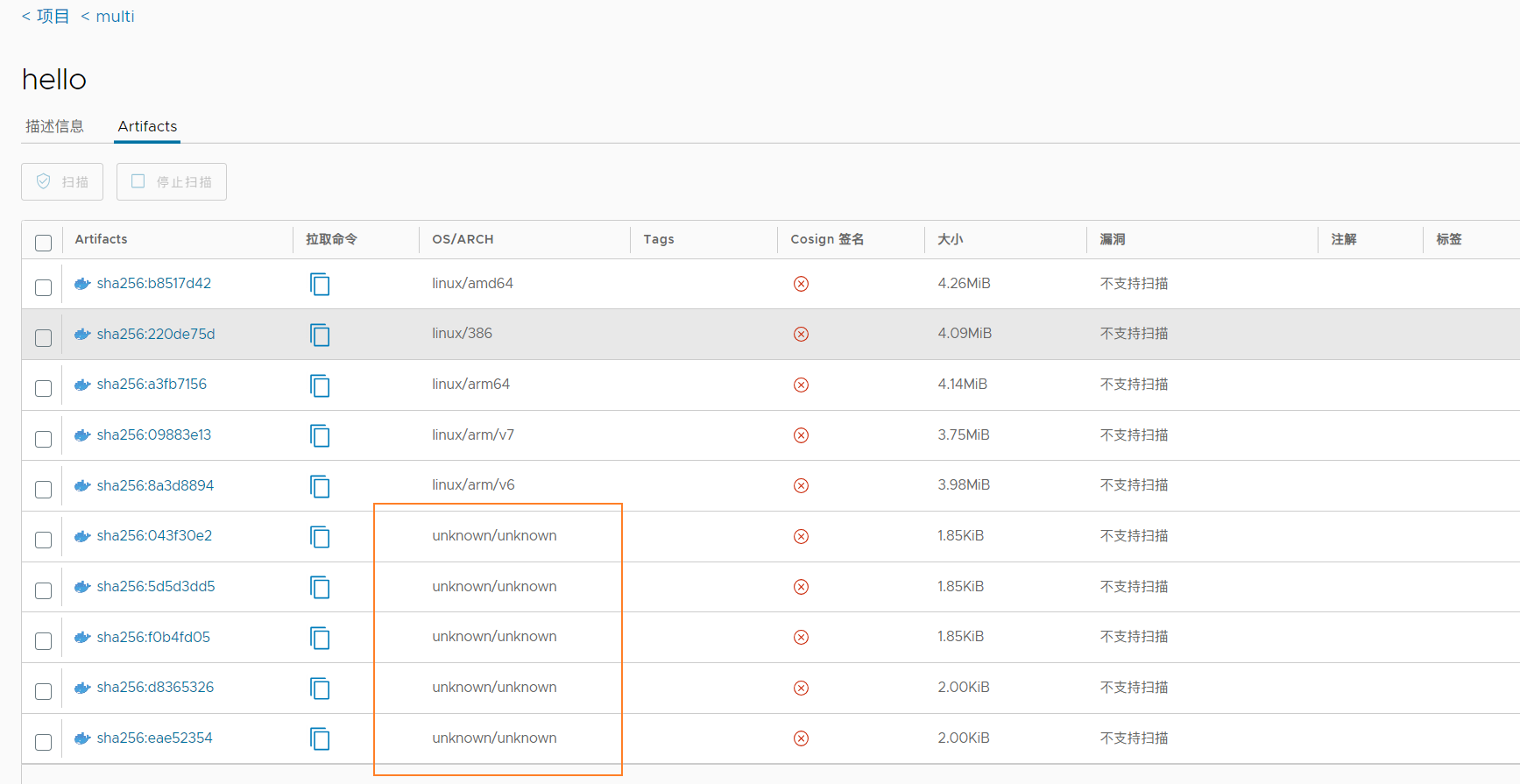

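Recent versions of buildx support attestations (BuildKit version >= 0.11 and Buildx version >= 0.10). Attestations attach supply-chain metadata to an image: information supplied by the image producer that is used for provenance tracing and for image scanning policies. It is a newly proposed security standard and is enabled by default. Harbor can only display this metadata correctly from v2.7 onward, so on older versions leaving it enabled causes a display bug, shown in the figure (os/arch appears as unknown/unknown). It is therefore advisable to turn the feature off when building, using either the command-line flag or the environment variable: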

$ docker buildx build --provenance=false

$ export BUILDX_NO_DEFAULT_ATTESTATIONS=1
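To confirm which platforms a pushed tag really contains, and whether attestation entries are the source of unknown/unknown, the output of `docker buildx imagetools inspect` can be filtered. The sketch below assumes that each manifest is listed with a line of the form "Platform:  os/arch"; `image_platforms` is a hypothetical helper name.

```shell
# image_platforms: read `docker buildx imagetools inspect IMAGE` output on
# stdin and print one platform per line, skipping the unknown/unknown
# entries that attestation manifests produce.
image_platforms() {
    awk '$1 == "Platform:" && $2 != "unknown/unknown" { print $2 }'
}
```

For the image built earlier this would be used as `docker buildx imagetools inspect 192.168.32.135:9001/multi/arch-demo:v1.0 | image_platforms`.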

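Other notes

Multi-architecture images built with docker buildx cannot be stored locally and do not show up in docker images, so they are normally pushed straight to a registry.

Another way to produce a multi-architecture image is to build the per-platform images locally first and then merge them with docker commands; see other blog posts for details.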

References

- buildx documentation on the attestations parameters

- Harbor display bug, fix merged in v2.7

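Unless otherwise noted, the content of this page is licensed under the Creative Commons Attribution 4.0 License, and code samples are licensed under the Apache 2.0 License. Content may become outdated over time; older content is periodically updated or removed.

Last updated (CST): 2023-08-21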
